Agner Fog measured Skylake’s branch mispredict penalty at 15-20 cycles. Per 1000 instructions, Skylake loses at least 96.75 cycles to mispredicts while Zen 2 loses 82.4. Skylake therefore wastes about 17.4% more cycles than Zen 2, a difference in Zen 2’s favor.

## AMD’s Non-Scheduling FP Queue: A Clever Approach

Traditionally, a micro-op sent to the backend is given a slot in the reorder buffer, the scheduler, and some other queues depending on what exactly it does. Every cycle, the CPU potentially checks every scheduler entry to find something to send to the execution units. Skylake uses a unified scheduling queue for both integer and floating point operations.

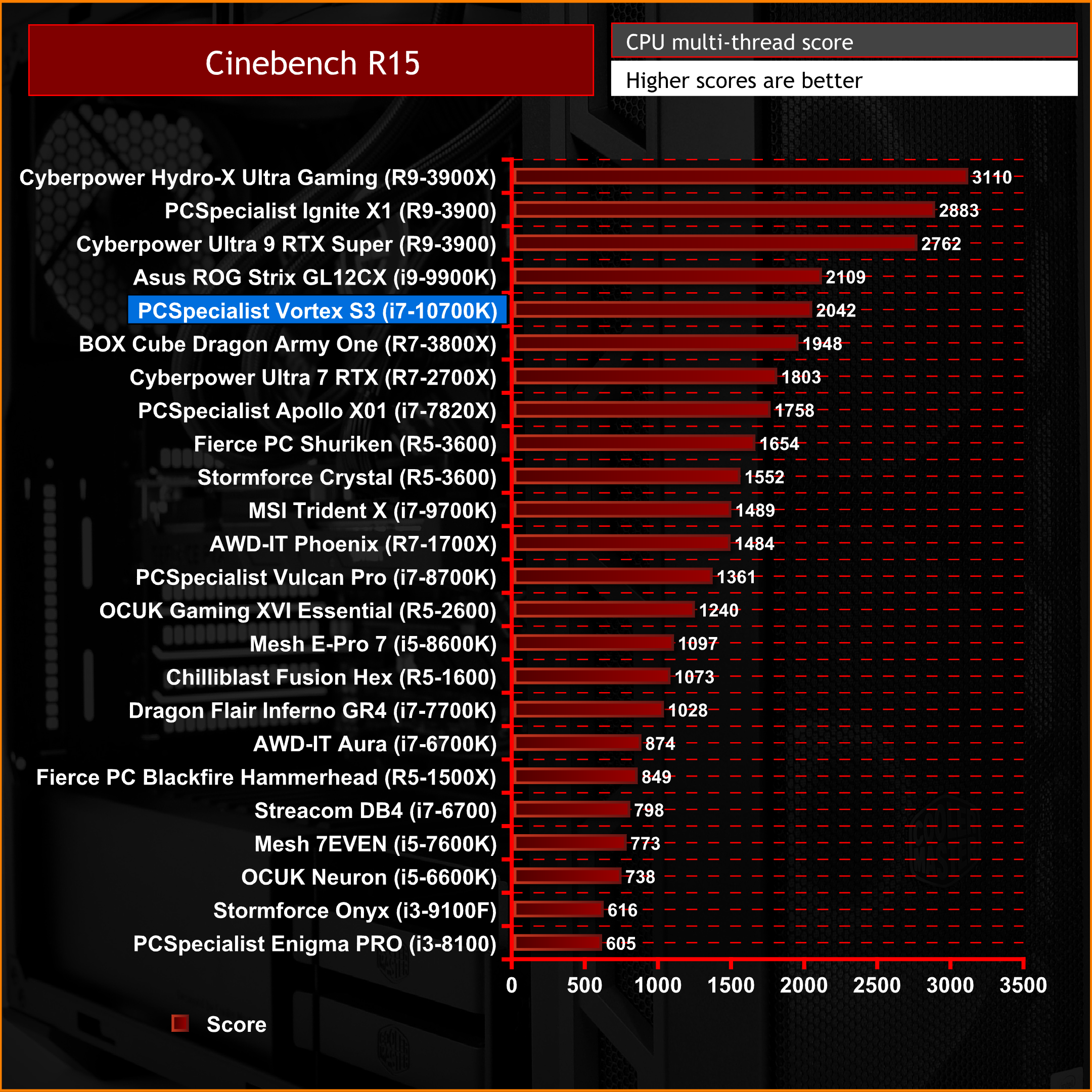


Plotting IPC against per-interval metrics lets us see whether portions of CBR15 with higher cache hitrates or fewer mispredicts also have better IPC. From there, we can estimate how much a given metric impacts performance.

## Branch Prediction: A Significant AMD Lead

Branch predictors guess where to fetch instructions from without waiting for branch instructions to execute. An incorrect guess means fetching down the wrong path, hurting performance. When the execution engine finds that the actual target doesn’t match the predicted one, it has to squash the wrongly fetched instructions and wait for instructions to be delivered from the correct path. To keep the execution pipeline correctly fed, both Skylake and Zen 2 implement incredibly complex branch predictors that take significant die area.

Figure: Cinebench R15 branch prediction accuracy, collected using performance counters for retired (mispredicted) branches.

Figure: Correlation between IPC and branch prediction accuracy.

There’s a clear positive correlation here, for both CPUs. Zen 2’s massive branch predictor achieves 96% average accuracy, beating Skylake’s 94.9% average. Per instruction, Skylake suffers 25% more mispredicted branches. To be exact, Zen 2 gets 5.15 branch mispredicts per thousand instructions (MPKI), while Skylake gets 6.45 branch MPKI.

A mispredict means the CPU ends up fetching down both the incorrect path and the correct one. Thus, more branch mispredicts result in a higher ratio of ops delivered by the frontend to instructions retired. Here, Zen 2’s frontend delivered 1.39 ops per instruction retired, while the figure for Skylake is 1.63. Skylake’s frontend therefore delivers about 17% more ops per retired instruction than Zen 2’s, and that extra bandwidth is wasted. Both CPUs have about a 1:1 micro-op to instruction retired ratio in this test, so the difference isn’t explained by either processor decoding instructions into more micro-ops.

We can also estimate how many cycles were wasted due to mispredicts. Zen 2’s optimization manual states that the mispredict penalty “is in the range from 12 to 18 cycles” while the “common case penalty is 16 cycles”.
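The mispredict figures quoted in this article can be cross-checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes the cycles-lost estimate is simply MPKI multiplied by a per-mispredict penalty, using Zen 2’s 16 cycle common case and the 15 cycle low end of Agner Fog’s Skylake range. That pairing is inferred because it reproduces the 82.4 and 96.75 cycle figures, and the function and variable names are made up for this example.

```python
# Figures quoted in the article text.
ZEN2_MPKI = 5.15          # branch mispredicts per 1000 instructions
SKYLAKE_MPKI = 6.45
ZEN2_OPS_PER_INSTR = 1.39     # frontend ops delivered per instruction retired
SKYLAKE_OPS_PER_INSTR = 1.63

# Assumed penalties: Zen 2 common case (16 cycles) and the low end of
# Agner Fog's 15-20 cycle range for Skylake (hence a "minimum" estimate).
ZEN2_PENALTY_CYCLES = 16
SKYLAKE_PENALTY_CYCLES = 15


def cycles_lost_per_1000_instr(mpki, penalty_cycles):
    """Estimate cycles lost to mispredicts per 1000 instructions."""
    return mpki * penalty_cycles


def percent_more(a, b):
    """How much larger a is than b, in percent."""
    return (a / b - 1.0) * 100.0


zen2_lost = cycles_lost_per_1000_instr(ZEN2_MPKI, ZEN2_PENALTY_CYCLES)
skylake_lost = cycles_lost_per_1000_instr(SKYLAKE_MPKI, SKYLAKE_PENALTY_CYCLES)

mpki_gap = percent_more(SKYLAKE_MPKI, ZEN2_MPKI)
frontend_gap = percent_more(SKYLAKE_OPS_PER_INSTR, ZEN2_OPS_PER_INSTR)
cycle_gap = percent_more(skylake_lost, zen2_lost)

print(f"Zen 2 cycles lost per 1000 instructions:   {zen2_lost:.2f}")
print(f"Skylake cycles lost per 1000 instructions: {skylake_lost:.2f}")
print(f"Skylake mispredicts per instruction: {mpki_gap:.1f}% more")
print(f"Skylake frontend ops per instruction: {frontend_gap:.1f}% more")
print(f"Skylake cycles lost to mispredicts:  {cycle_gap:.1f}% more")
```

Note that every percentage here is expressed relative to Zen 2, which is why the cycle gap comes out at roughly 17.4% rather than a smaller number measured against Skylake’s total.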
A note on the instruction composition figures: not all instruction categories are included, and some categories overlap.

CBR15 uses a lot of SSE instructions (41.7%) and doesn’t take advantage of AVX. Most executed SSE instructions are scalar, so the benchmark does not heavily stress the CPU’s vector units. Floating point calculations are dominated by FP64, with a bit of FP32 sprinkled in. Curiously, there are almost twice as many FP64 multiplies (6.51%) as FP64 adds (3.73%). Over 40% of instructions access memory, with roughly three times as many loads as stores.

Every tile in CBR15 has different characteristics. Because we’re sampling performance counters at 1 second intervals, we can plot IPC versus various metrics.
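One way to put a number on such a plot is a Pearson correlation coefficient over the per-interval samples. The sketch below is a minimal illustration, not the tooling used for this article: the `pearson` helper is written for this example, and the sample values are hypothetical placeholders rather than measured data.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# HYPOTHETICAL placeholder samples, one per 1 second interval:
# (branch prediction accuracy, IPC). These are NOT measurements.
samples = [
    (0.940, 1.50),
    (0.948, 1.58),
    (0.955, 1.61),
    (0.962, 1.70),
    (0.970, 1.74),
]

accuracy = [s[0] for s in samples]
ipc = [s[1] for s in samples]

r = pearson(accuracy, ipc)
print(f"correlation between accuracy and IPC: {r:.3f}")
```

A coefficient near +1 would indicate that intervals with better prediction accuracy also tend to have higher IPC. Correlation alone does not show how much of the IPC difference the metric actually causes, which is why the text only speaks of guessing at impact.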


Cinebench R15 (CBR15) is a popular benchmark based on Cinema4D’s 3D rendering engine. It can utilize all available CPU threads, but here we’ll be analyzing it in single thread mode. In short, Zen 2 pulls ahead thanks to its superior branch predictor, larger mid-level cache, and ability to track more pending floating point micro-ops in the backend.

## Benchmark Overview

Figure: Cinebench R15 instruction composition, collected using Intel’s Software Development Emulator (SDE).
